Monday, 16 March 2009

Convergence 2009 - New Orleans

I noted a few presentations of interest, notably one on my old bugbear, performance issues. I can only subscribe to the advice given; I just wish that they would consider doing some violence to the functional data model in order to enhance performance.

Specifically around InventDim, which has only grown as a performance issue with the addition of the Site dimension, and around the LedgerBalanceTrans / DimTrans processing.

Another general point is to improve performance by creating more and better history clean-up processes.

Today we have some processes to clean up the sales and purchase update histories, some concatenation of the inventory settlements and deletion of reversals. We could do more by providing deletion or archiving of the order tables (great for SO and PO performance) and by reviewing the removal of non-critical journals (confirmations, picking lists and packing slips).

Of course we will eventually have an archiving tool built in (promised for V6), and in September there will be one for all existing versions from V3 onwards. Unless forced to, I would not use the archiving tools before they are part of the standard Ax code, as only then can I tinker with them to make them work for me. From experience this is always needed to some extent with any new function in Ax :-).

Anyhow, I will give links and more information later, when I have more time to report on what I have gleaned from going through the Convergence presentations.

Best Regards

Sven

Friday, 13 March 2009

Dynamics Ax V5 - 2009 Upgrading - Bugs

Anyhow, I have just completed migrating our current core application to Ax 2009, and it was a less positive experience than I had hoped.

While upgrading the few objects we had changed in our Ax developments, I noticed some pretty silly little details, and I also noted that one of my favourite performance bugbears, which had ostensibly been fixed, had not really been addressed.

I noticed that during the upgrade, in the ReleaseUpdate classes, the old customer return codes are mapped across to a new table, ReturnDispositionCode. In the process the return location is stripped from the data and, horror of horrors, another method then simply deletes the information.

We would not have noticed this if we had not made a modification around this process to add more handling to the returns process.

The funny part of all this is that the method allowing for a setting of the inventory dimensions based on the return code still exists.

I have nothing against the new returns functionality but I cannot accept a functional regression with no warning.

A further oddity is the way the new return functionality has been implemented: it now creates one or more shadow lines, which means that if you had solutions relying on totalling the order lines, you will be caught out by these new "hidden" lines.

As you know if you have read some of the earlier posts, I am very concerned with performance in general, as I believe it is an issue not only for large installations but for all installations, and in particular high-volume ones.

I have touched before on my metric regarding the summary tables LedgerBalancesTrans and LedgerBalancesDimTrans: in most cases these so-called summary tables are not really a summary, and financial reporting is therefore needlessly slow.

In V3 and V4 the system creates up to 10 or up to 20 entries per day per dimension combination. In V5 this has been reduced to one per day per dimension, plus a transient (or vector) entry created temporarily during the update for each stream doing an update.

This of course reduces the number of transactions, but not that significantly per dimension: in many of my real-world cases the dimensions are used heavily, so there were never many transactions for any one day and dimension combination. We have gone from a so-called summary holding about 80% as many rows as the transactions themselves to 50-60%, which in my book is still too much.

I hold out for having one transaction / account / dimension per financial period. This would really improve summary reporting performance.
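To make the row-count argument concrete, here is a minimal Python sketch (not Ax code) that summarises the same ledger transactions once per account/dimension/day and once per account/dimension/period. The transaction tuple, the dimension values and the use of calendar months as financial periods are all assumptions for illustration; the point is simply that the number of summary rows equals the number of distinct keys.

```python
from collections import defaultdict
from datetime import date

# Hypothetical, simplified ledger transaction: (account, dimension, posting date, amount).
# These are illustrative fields, not the actual LedgerTrans columns.
Trans = tuple[str, str, date, float]

def summarize(trans: list[Trans], key_fn) -> dict:
    """Sum amounts per summary key; len() of the result is the summary row count."""
    totals: dict = defaultdict(float)
    for account, dim, posted, amount in trans:
        totals[key_fn(account, dim, posted)] += amount
    return dict(totals)

def per_day(account: str, dim: str, posted: date):
    # Roughly the V5 granularity: one row per account/dimension/day.
    return (account, dim, posted)

def per_period(account: str, dim: str, posted: date):
    # Proposed granularity: one row per account/dimension/financial period
    # (calendar months are assumed to be the periods here).
    return (account, dim, posted.year, posted.month)

trans = [
    ("4000", "SALES-01", date(2009, 3, 2), 100.0),
    ("4000", "SALES-01", date(2009, 3, 3), 50.0),
    ("4000", "SALES-01", date(2009, 3, 3), 25.0),
    ("4000", "SALES-02", date(2009, 3, 16), 10.0),
]
daily = summarize(trans, per_day)        # 3 summary rows
periodic = summarize(trans, per_period)  # 2 summary rows
```

With heavily used dimensions most keys hold only a few postings per day, so the daily summary stays close to the transaction count, while the period summary collapses a whole month per account and dimension into one row.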

Anyhow more about this and other V5-2009 matters later.

Take Care

Sven

Saturday, 28 February 2009

Upgrading to the Next Version of Dynamics Ax

From experience, this part of an upgrade project can take anywhere from 10% to 100% or more of the initial development effort, depending on what modifications were made and how.

Here is the list of don'ts from the 100%+ examples:

- Changing the sequence of standard BaseEnums and the related code. For example, a customer / consultant who preceded me on one case decided it would be a good idea to change the sales order type enum and add some new types in the middle of the list :-(. Upgrading this was an unnecessarily big job; had they just added them at the end, with a big gap in the enum values, it would have been much less of an issue.

- Duplicating classes and calling the duplicates instead of the standard class. The problem here is that the layer mechanism that helps resolve upgrades is bypassed, and one is forced to export the class and do a manual external comparison.

- Writing code in unusual places, such as form buttons, form data sources and data source fields.

- Changing the standard data structure relationships, in data as well as in classes.

Partial list ends here TBC
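A minimal Python sketch of why inserting enum elements in the middle hurts, under the simplifying assumption that each element's stored integer value follows its position in the list (the element names, including the custom Consignment, are hypothetical). Every element after the insertion point changes value, so existing records that stored 2 for Sales suddenly read back as the new element unless every stored value is converted during the upgrade. Adding the custom element at the end, after a gap, leaves the standard values untouched.

```python
# Simplifying assumption: an element's stored value is its position in the list.
def assign_values(names: list[str]) -> dict[str, int]:
    return {name: index for index, name in enumerate(names)}

def changed_values(before: dict[str, int], after: dict[str, int]) -> set[str]:
    """Elements whose stored integer differs between two versions of the enum."""
    return {name for name, value in before.items() if after.get(name) != value}

def decode(values: dict[str, int], stored: int) -> str:
    """Element name that a stored database value maps to."""
    return next(name for name, value in values.items() if value == stored)

standard = ["Journal", "Subscription", "Sales", "ReturnItem"]  # hypothetical element list
inserted = ["Journal", "Subscription", "Consignment", "Sales", "ReturnItem"]

v_standard = assign_values(standard)
v_inserted = assign_values(inserted)                 # custom element in the middle
v_appended = {**v_standard, "Consignment": 50}      # custom element at the end, after a gap
```

Comparing `v_standard` with `v_inserted` shows Sales and ReturnItem moving, which is exactly the data conversion that made that upgrade so expensive.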

Take Care

Sven

Friday, 20 June 2008

Performance Issues Resolution in Ax Part II

However, as I am lazy, I will simply try to migrate the code and tell you more afterwards.

I noted some interesting changes in V5 regarding multi-threaded execution of processes using the new batch service.

Will report back on this later in more detail.

/Sven

Wednesday, 28 May 2008

Adding a storage Dimension (Inventory Status)

To help somewhat in this regard, I have made an example project that adds a new Inventory Status table.

The Chinese Localization does not pose any issues as such, as none of the elements touched are modified by the LOS layer where it resides, nor by the SYP layer. I used a VPC I had on hand, and it happened to have this installed. I have added the standard sales and purchase status codes in the Inventory Parameters screen.

For a full implementation, where the statuses are instead added as parameters on customers (carried over to sales) and on suppliers (carried over to purchases), with a group on the item table defining which statuses are allowed for any given item, please look to a later release. It will come given time and will include other innovations to develop these ideas into a more usable module. This one is shared as a work in progress, in the spirit of sharing :-).

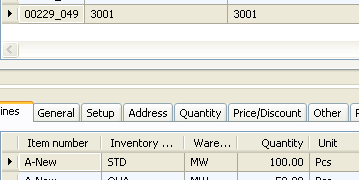

After importing the project and setting up an item with an item dimension group that includes the new inventory status dimension, you can configure purchase orders to send items to any inventory status.

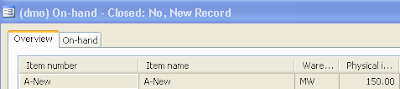

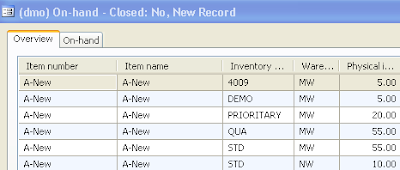

The inventory on-hand screen can now show you either the total on hand of your item or the on-hand quantity broken down by status.

And you can now see the main purpose of this modification: it allows you to manage stock logically without using the warehouse for the purpose.

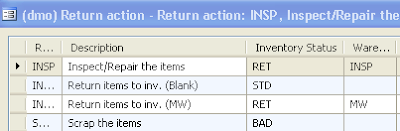

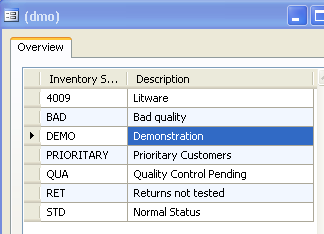

We can now create reject statuses instead of having to create reject warehouses: we can add a status to the return reasons screen and keep our returned goods in the normal warehouse, but with a flag indicating that they are returns.

Or even a flag indicating that they are unusable (this is not enforced, which means you can still sell this stock, though you have to select it explicitly to do so).

You can also use the new status for things other than quality-related issues, for example to denote stock you have lent out for demonstration purposes.

Or stock that you have allocated to specific customer categories, or even specific customers.

Giving an on hand picture more like this one.

With the categories being configured as follows


All up to you to configure but it opens up quite a number of possible trade related uses for not a lot of coding changes.

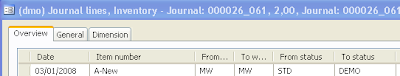

You do however wind up doing a lot of transfer journals when you want to change the status of your inventory, fortunately they are reasonably simple to do.

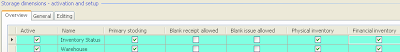

Another thing you must do, depending on what you want to use the added dimension for, is to configure the inventory dimensions with this as one that has the primary stocking flag set and probably also the physical and Financial inventory flags set.

I will not go into what all the flags do but let you use the standard documentation to help you determine how you want to use the new dimension.

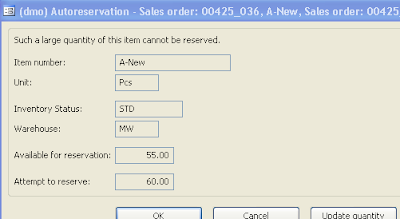

All the standard sales related restrictions immediately apply.

F.ex. the reservation screen knows to limit orders against the not available status, which is quite neat and nifty considering the few lines of code involved.

The above is by no means a complete documentation of the possibilities of this little modification nor is it to be construed as a limiting factor on what you can do with this modification.

Now comes the less amusing parts of this:

Note that by downloading and/or importing the linked code you are accepting the licensing conditions. If you give the code to anyone else, you must also apprise them of these licensing conditions, and they must agree to them prior to using the code.

The full conditions are those of the OSL (http://www.opensource.org/licenses/osl-3.0.php), which basically allows you to do anything, including using the code commercially.

Monday, 26 May 2008

In search of Performance (Finance this Time)

This has important performance impacts on reporting when you use financial dimensions actively.

Normally you would expect a substantial reduction in the number of transactions going from LedgerTrans to LedgerBalanceTrans and DimTrans. In practice, in most of the implementations I visit, the reduction is more like 10% for DimTrans and 20% for BalanceTrans, which is not much and almost makes these tables redundant.

In any case the advantage in performance using these tables rather than the LedgerTrans directly for reporting is not very big.

An easy way to see whether you have this issue is to configure your chart of accounts to show the current balance. If the display is jerky and slow, which is very often the case, the level of summarisation provided by the LedgerBalance view on LedgerBalanceTrans is not sufficient to allow a speedy summary of the ledger balances to date.

Another is to compare the number of rows in LedgerTrans with those in LedgerBalanceTrans and DimTrans respectively. If the latter are much smaller, the optimisation works in your case; if not, your reporting will eventually slow down.

What I would suggest is making it possible to configure a level of consolidation in the balances, so that they are reasonably fast for reporting purposes. Of course this means posting will slow down slightly, but I believe the gains in reporting justify the expenditure :-).

/Sven

Saturday, 24 May 2008

AX 2009 or V5 goes RTM

This is the first version of Ax where all of the core development has been done in Redmond, and it will be interesting to see where things go. Some sources say the intention is to remove MorphX (the internal Ax development language and environment) and replace it with a .NET development environment. This may prove harder than they realise, as the installed base would rebel at having to rewrite all their customizations completely, not to mention the size of the job MS and its partners would face.

What does this mean for customers? Well, it means AX 2009 will be out and sold over the summer, as the time frame from RTM to sales is usually very short.

Anyway, here's to Ax 2009; I hope the launch and the program are successful.

/Sven

Friday, 2 May 2008

Performance and Inventdim PII

I have tried this in several instances; the problem is the sheer volume of information that the SQL database is asked to manipulate.

Concrete example: the customer had built up a database of 24 million inventory transactions, with 2.5 million lines in InventDim and 6 million lines in InventSum. The only active dimensions were configuration on the inventory side and warehouse, location and pallet on the storage side; no serial or batch numbers, so reasonably limited usage of InventDimIds. They used FIFO costing, so inventory settlements were not too bad, at around 16 million.

We ran a valuation report as at 3 months in the past. The report was unable to complete after 100 hours of processing time. The server was a quad-processor machine with 32 GB of RAM and a dedicated 15K RPM RAID 0+1 array of 24 disks; no penny-pinching there.

The problem lies in the volume of data.

A consultant who helped design the above data model came and installed his solution, which basically takes the data from InventTrans + InventDim + InventSettlement and creates a flat table from the result. This took 12 hours of processing time; afterwards the valuation report printed in less than 10 minutes.
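A minimal Python sketch of the idea behind such a flat table, as I understand it from the description above (not the consultant's actual code): the expensive step resolves each transaction's InventDimId once into wide rows, after which a valuation as at a past date is a single filtered pass with no joins. All field names are hypothetical, and settlement adjustments (InventSettlement) are left out to keep it short.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date

@dataclass(frozen=True)
class InventTrans:           # hypothetical, heavily simplified transaction
    item_id: str
    inventdim_id: str
    date_physical: date
    qty: float
    cost_amount: float

def flatten(trans, inventdim):
    """One-off expensive step: resolve each dimension id into inline columns."""
    return [
        (t.item_id, inventdim[t.inventdim_id]["warehouse"], t.date_physical, t.qty, t.cost_amount)
        for t in trans
    ]

def valuation_as_of(flat_rows, as_of: date):
    """Quantity and value per item/warehouse at a past date, without any join."""
    totals = defaultdict(lambda: [0.0, 0.0])
    for item, warehouse, when, qty, amount in flat_rows:
        if when <= as_of:
            totals[(item, warehouse)][0] += qty
            totals[(item, warehouse)][1] += amount
    return {key: tuple(value) for key, value in totals.items()}

inventdim = {"D1": {"warehouse": "MAIN"}, "D2": {"warehouse": "RET"}}
trans = [
    InventTrans("A", "D1", date(2009, 1, 5), 10, 100.0),
    InventTrans("A", "D1", date(2009, 2, 5), -4, -40.0),
    InventTrans("A", "D2", date(2009, 3, 5), 2, 20.0),
]
flat = flatten(trans, inventdim)
```

The cost is paid once when building `flat`; every later "as at" report only scans it.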

I think the above illustrates my problem with the current data model. Having said all this, many customers will never notice the issue, as they do not have huge volumes of transactions. But as we all know, systems grow over time, and well, this one does not have an archiving tool :-).

/Sven

Wednesday, 26 March 2008

Dynamics 2009 First Impressions

Likes:

The Version control built into Ax

The workflow wizards in almost all modules

The Expense module (finally)

Dislikes:

The Site addition is not really well thought through: it adds another inventory dimension, and the companies most needing it are heavy InventDim users, so this will have a big performance impact on all inventory reporting.

The new apparent sub menu in the display window

The new colours in the TAP3 version seem a bit... well, I hope they are not the final ones.

More to come

Take Care

Sven

Sunday, 16 March 2008

Performance and InventDim

In the product developed before, called XAL, the inventory dimension fields were present in the tables concerned; that is, the warehouse was a field on the equivalents of SalesLine, CustInvoiceTrans, InventTrans, etc.

Finally, this approach allowed for really huge data structures with simple index-based group-by queries and simple index constructs, compared to the forced joins in our current InventSum spaghetti code, especially given the huge number of InventDim records when using batch or serial numbers.

What would I like to see :

1. Delete Inventdim as a table (whew what a performance boon that would be)

2. Create an Array field like the finance dimensions Called InventSKU with three fields Config, Size and Colour

3. Create an Array field InventStorage with three fields Warehouse, Location, Pallet

( add a fourth one called Site for version 5 compatibility )

4. Create an Array field InventCondition with three fields Batch, SerialNumber, and Condition

Compared to V5 I have only introduced one new field in the above (Condition), and compared to V3 and V4 two new fields (Condition, plus Site, which V5 adds).

5. Add the above array fields to all tables containing InventDimId. I realise that this means twice the fields for the transfer table and will cause a lot of code changes :-)

However the performance improvement in large scale installations !!!!

Also the ease of adding new Inventory dimensions !!!!

The simplification of inventsum logic !!!!
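Here is a rough Python model of the proposal (not Ax code): the InventSKU, InventStorage and InventCondition array fields become named tuples carried directly on each transaction row, so an on-hand figure by any combination of dimensions is a single grouping pass with no InventDim lookup. The field names follow the list above; the row type and the example values are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import NamedTuple

class InventSKU(NamedTuple):
    config: str
    size: str
    colour: str

class InventStorage(NamedTuple):
    site: str          # the fourth field, for V5 compatibility
    warehouse: str
    location: str
    pallet: str

class InventCondition(NamedTuple):
    batch: str
    serial_number: str
    condition: str

@dataclass(frozen=True)
class TransRow:        # hypothetical transaction row carrying its dimensions inline
    item_id: str
    sku: InventSKU
    storage: InventStorage
    cond: InventCondition
    qty: float

def on_hand(rows, key):
    """Group quantities by any function of the inline dimension fields."""
    totals = defaultdict(float)
    for row in rows:
        totals[key(row)] += row.qty
    return dict(totals)

rows = [
    TransRow("A", InventSKU("", "M", "Red"), InventStorage("S1", "MAIN", "A-01", ""),
             InventCondition("", "", "OK"), 10.0),
    TransRow("A", InventSKU("", "M", "Red"), InventStorage("S1", "MAIN", "A-02", ""),
             InventCondition("", "", "Return"), 2.0),
    TransRow("A", InventSKU("", "L", "Red"), InventStorage("S1", "MAIN", "A-01", ""),
             InventCondition("", "", "OK"), 5.0),
]
```

For example, `on_hand(rows, lambda r: r.cond.condition)` splits stock by condition, and adding a new dimension means adding one field to a tuple rather than another InventDim column to join on.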

Take Care

Sven

Thursday, 21 February 2008

How to test for a methods implementation

After a little searching I came to SysDictClass, which implements a method that lets you call a method on an object.

This method is called invokeObjectMethod. It accepts an object, a method name and an optional flag telling it to search the base class as well, and invokes the method; if the method does not exist it throws an error (it also throws an error if the method takes parameters).

Here is the complete Method code

    public static anytype invokeObjectMethod(Object _object, identifiername _methodName, boolean _tryBaseClass = false)
    {
        DictClass dictClass = new DictClass(classidget(_object));
        DictClass dictClassBase;
        DictMethod dictMethod;
        int i;
        ;

        for (i=1; i<=dictClass.objectMethodCnt(); i++)
        {
            if (dictClass.objectMethod(i) == _methodName)
            {
                dictMethod = dictClass.objectMethodObject(i);
                if (dictMethod.parameterCnt() == 0)
                {
                    // invokeObjectMethod is listed as a dangerous API. Just suppress BP error;
                    // CAS is implemented by DictClass::callObject.
                    // BP deviation documented
                    return dictClass.callObject(_methodName, _object);
                }
                throw error(strfmt("@SYS87800", _methodName));
            }
        }

        if (_tryBaseClass && dictClass.extend())
        {
            dictClassBase = new DictClass(dictClass.extend());
            // BP deviation documented
            return SysDictClass::invokeObjectMethod(dictClassBase.makeObject(), _methodName, _tryBaseClass);
        }

        throw error(strfmt("@SYS60360", _methodName));
    }

As you can see, the code has pretty much all the functionality required: it cycles through the methods on the given class and tests for the existence of the wanted method by comparing names.

So the above requires little change to create the code below:

    public static boolean xxxObjectMethodExists(Object _object, identifiername _methodName, boolean _tryBaseClass = false)
    {
        DictClass dictClass = new DictClass(classidget(_object));
        DictClass dictClassBase;
        int i;
        ;

        // Compare the requested name with each instance method declared on this class
        for (i=1; i<=dictClass.objectMethodCnt(); i++)
        {
            if (dictClass.objectMethod(i) == _methodName)
            {
                return true;
            }
        }

        // Optionally repeat the search on the parent class
        if (_tryBaseClass && dictClass.extend())
        {
            dictClassBase = new DictClass(dictClass.extend());
            // BP deviation documented
            return SysDictClass::xxxObjectMethodExists(dictClassBase.makeObject(), _methodName, _tryBaseClass);
        }

        return false;
    }

The code should work if you simply copy and paste it in.

Why did I need this? I am working on a replacement for the batch processing in Ax, as I have too many operational problems with it. I have decided it is time to create a batch processing framework that can be called from the OS through COM and can be used to execute any class in the system.

So I needed to know whether a class implements a dialogMake method, in order to know whether I can call it. I want to be able to execute RunBase classes as well, not only RunBaseBatch classes :-).

But if you have another need, feel free to use it, and if you have a better way, also feel free to say so.
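For readers outside Ax, the same check can be sketched in Python (purely illustrative; the class and method names below are hypothetical): look for the name among the methods the object's own class defines and, if asked, walk up the inheritance chain. One difference worth knowing: Python's string comparison here is case-sensitive, whereas X++ string comparison is not.

```python
import inspect

def object_method_exists(obj: object, method_name: str, try_base_class: bool = False) -> bool:
    """True if method_name is defined on obj's own class, or on an ancestor
    class when try_base_class is set (mirroring the X++ helper above)."""
    classes = inspect.getmro(type(obj)) if try_base_class else (type(obj),)
    for cls in classes:
        # cls.__dict__ holds only what this class itself defines, not inherited members
        if callable(cls.__dict__.get(method_name)):
            return True
    return False

# Hypothetical classes standing in for RunBase-style jobs
class RunBaseLike:
    def run(self):
        pass

class MyJob(RunBaseLike):
    def dialog_make(self):
        pass
```

So `object_method_exists(MyJob(), "run")` is False, while passing `try_base_class=True` finds `run` on the parent, just like `_tryBaseClass` in the X++ version.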

Take care

Sven

Monday, 18 February 2008

Refreshing screen Info PIII

And as is often the case I found the solution in someone else's code :-).

Columbus have developed an integration tool called Galaxy. It imports data into a container and then presents that data in a form using a mask, and the labels at the top of the presentation have exactly the same requirements as my size / colour grid screen.

That is, as the user moves down and selects rows, the labels at the top should change.

So what is the answer ?

Well it may seem strange but the following works.

Set the first label to a single space, that is:

    QtyGrid1.label(' ');

and then proceed to set the others.

I do not know what flag is set off by setting the label to a space (ASCII 32), but it forces a redraw, and thereafter everything works as expected.

As I lifted this from a version of Galaxy intended for V3, I believe the fix works in both versions; I can certainly confirm that it works in V4.

Have fun

Sven

Long Break

Take Care

Sven

Thursday, 23 August 2007

V4 Service pack 2

Even in Service Packs !

The Country object as it was in V4 and SP1 is now AddressCountryRegion, which is consistent with the rest, however .....

Anyhow, enough said; I have not found any others so far.

/Sven

Correction:

Palle commented that, as far as he knew, Country had been renamed in V4, and he is right. What happened is that the upgrade process from V3 allowed the object to retain its old name all the way through to SP2, but no further.

So what I thought was an SP2 issue was in fact an issue related to the upgrade process.

Sunday, 12 August 2007

Support Policy bugbear

| Version | Release date | Support ends |
|---|---|---|
| Microsoft Dynamics AX 3.0 SP4 | May 17th, 2005 | April 10th, 2007 |
| Microsoft Dynamics AX 3.0 SP5 | February 28th, 2006 | July 8th, 2008 |
| Microsoft Dynamics AX 3.0 SP6 | May 1st, 2007 | January 13th, 2009 |

The rule is: take the release date of the subsequent SP, add one year, and support ends on the 2nd Tuesday at the start of the next quarter.
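The rule can be checked against the table with a small Python sketch. It assumes calendar quarters and reads "next quarter" as the first quarter strictly after the anniversary date (the policy wording does not say what happens if the anniversary falls exactly on a quarter start); with those assumptions it reproduces the SP4 and SP5 end dates above.

```python
from datetime import date, timedelta

def second_tuesday(year: int, month: int) -> date:
    first = date(year, month, 1)
    offset = (1 - first.weekday()) % 7   # weekday(): Monday=0, Tuesday=1
    return first + timedelta(days=offset + 7)

def support_end(next_sp_release: date) -> date:
    """Subsequent SP release + 1 year, then the 2nd Tuesday of the following quarter."""
    try:
        anniversary = next_sp_release.replace(year=next_sp_release.year + 1)
    except ValueError:  # 29 February has no anniversary in a non-leap year
        anniversary = next_sp_release.replace(year=next_sp_release.year + 1, day=28)
    quarter = (anniversary.month - 1) // 3     # 0..3
    start_month = 3 * (quarter + 1) + 1        # first month of the next quarter
    year = anniversary.year
    if start_month > 12:
        start_month, year = 1, year + 1
    return second_tuesday(year, start_month)
```

For example, `support_end(date(2006, 2, 28))` (the SP5 release) gives 10 April 2007, the SP4 end date, and `support_end(date(2007, 5, 1))` gives 8 July 2008.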

The issue is that customers take much longer to actually upgrade than the interval between SPs, and the problems caused by implementing an SP sometimes outweigh the advantages gained, especially as SPs contain not only corrections but also slipstreamed functionality releases. Of course this is not the intention, but MS should recognise reality in this regard.

Previously, MS supported versions for 5 years from the release date of the last SP. The latest policy change is obviously intended to push customers to speed up their upgrades from V2.5 and 3.0 to V4.

Unfortunately, from my experience this process is far from a simple task, and it will require a significant amount of time for each company that envisages this upgrade.

As the V4 upgrade did not contain many functional changes, many customers will probably choose to wait for V4.1 or 4.5, now renamed V5.0, to do their upgrades, as this is supposed to be the one containing a large number of functionality extensions.

In other words

V4 = Technical oriented upgrade ( new enforced AOS, AOT additions )

V5 = Functional oriented upgrade ( no new tech but lots of functionality (promised) )

I have just been told V5 may be delayed until the end of 2008, probably even 2009.

Now many customers will be in a bind: pay the consultancy costs of an upgrade from V3 to V4 with no feature-based business justification, or wait for V5 and, as a consequence, live for some time outside the support conditions recently imposed by Microsoft.

I believe it would be beneficial for MS to support previous versions and service packs for a longer period. What do you think? :-)

/Sven

Friday, 20 July 2007

Refreshing Screen Info in V4 PII

Great. Well, I have a support call in, and I am trying all sorts of detours to get the form to update the column headers; so far the only reliable one is for the user (!) to click on the scroll bar below the grid.

A single click is enough to redraw :-(.

I have not tried moving the screen back into V3 to see how V3 copes with it; it seems a rather retrograde step to undertake, especially as it is meant for a V4 implementation.

Anyhow will post on this subject again later.

Take Care

Sven

Thursday, 19 July 2007

Performance issues resolution in Ax Part I

Mostly when you have problems in Ax, as in most other ERP systems :-), it is related to some sort of contention that is concurrent access to key central information, or alternatively to large quantities of data being used/created.

In most of the instances I have seen, the quickest fix on SQL Server is simply adding more memory. Not that I am a big advocate of throwing money at the problem, but that is normally the cheapest solution these days.

In SQL Server 2000 and 2005 the main issue centres on one thing: lock escalation. Basically, if the server judges that it is running short of memory for locks, it can decide to replace the many individual row or page locks (a page is the unit of storage containing multiple rows in the database) with a single lock on the whole table. When this happens you tend to get a situation where everyone is waiting for everyone else.

Picture the Piccadilly Circus bus stop on a busy afternoon, with lots of buses all coming in to pick up and drop off passengers, having to wait for each other and thereby causing havoc for other traffic; that is the best physical representation.

In Ax, say the CustTrans or InventTrans table is concerned: while one process is using it, five others want to use it too. They cannot, as the first is blocking them, and they in turn hold locks on other tables, which they then block for others, creating a traffic jam in the database.

SQL Server decides to escalate when it has used more than a certain percentage of the memory available for managing locks, so if you give it more memory to play with, it is less likely to escalate and therefore to create the traffic jam. (It can also escalate when a single statement acquires a large number of locks on one table, roughly 5,000, and more memory does not prevent that case.)
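To picture why more memory helps, here is a toy Python model (emphatically not SQL Server's actual algorithm; all the numbers are arbitrary assumptions): each row lock costs a fixed amount of lock memory, and once usage passes a fraction of the budget, the requesting transaction's row locks on that table are swapped for one table lock, which then blocks every other transaction wanting that table. With a bigger budget, the same 60 row locks never escalate.

```python
class LockManager:
    """Toy model of memory-triggered lock escalation (numbers are arbitrary)."""

    def __init__(self, memory_budget: int, bytes_per_lock: int = 100,
                 escalation_fraction: float = 0.5):
        self.memory_budget = memory_budget
        self.bytes_per_lock = bytes_per_lock
        self.escalation_fraction = escalation_fraction
        self.row_locks: dict[tuple[str, str], set[int]] = {}   # (txn, table) -> row ids
        self.table_locks: set[tuple[str, str]] = set()         # (txn, table)

    def memory_used(self) -> int:
        count = sum(len(rows) for rows in self.row_locks.values()) + len(self.table_locks)
        return count * self.bytes_per_lock

    def lock_row(self, txn: str, table: str, row_id: int) -> str:
        if (txn, table) in self.table_locks:
            return "covered"                      # already holds the whole table
        self.row_locks.setdefault((txn, table), set()).add(row_id)
        if self.memory_used() > self.escalation_fraction * self.memory_budget:
            del self.row_locks[(txn, table)]      # swap the row locks...
            self.table_locks.add((txn, table))    # ...for one table lock
            return "escalated"
        return "row"

    def blocked(self, txn: str, table: str) -> bool:
        """True if another transaction holds a table lock, so this one must wait."""
        return any(other != txn and locked == table for other, locked in self.table_locks)

small = LockManager(memory_budget=10_000)
small_results = [small.lock_row("T1", "CustTrans", i) for i in range(60)]
big = LockManager(memory_budget=1_000_000)
big_results = [big.lock_row("T1", "CustTrans", i) for i in range(60)]
```

In the small case the 51st lock escalates and a second transaction T2 is then blocked on the whole of CustTrans; in the big case T1 keeps 60 row locks and T2 can still get at the other rows.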

SQL Server 2005 adds some new functionality concerning index lock escalation, but this is hard to use in Ax, as the indexes are created by the synchronization engine. You would have to add the settings by hand on each index :-( after creation, and then redo this every time Ax runs a synchronization.

Additionally, as you can see in this KB, where ways of reducing lock escalation are discussed, it is possible to switch escalation off using the startup flag -T1211, but the resulting error condition when the server runs out of lock memory is not pretty.

One option that seems intriguing is taking an incompatible, higher-level lock:

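    -- Holds an intent lock on mytable for one hour; while it is held,
    -- other sessions cannot escalate their locks on mytable to a table lock
    BEGIN TRAN
    SELECT * FROM mytable WITH (UPDLOCK, HOLDLOCK) WHERE 1=0
    WAITFOR DELAY '01:00:00'
    COMMIT TRAN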

In Ax the tables classically affected are a series of well-known ones. The problem with the above in Ax (as in most other ERPs) is that one problem hides others: if, for example, you resolve your issues on CustTrans, then perhaps CustTransOpen or CustSettlement becomes the problem, and so on. So this is often not a solution we can use to resolve our escalation issues; also, unless we hold the lock for the whole business day, we do not handle all the cases :-(.

Changing our queries to create smaller transactions is the next possibility mentioned.

Well, that is possible in a limited fashion. You will have to clarify functionally with the business whether they can accept the consequences, for example not creating sales orders with more than xx lines, not doing recap invoicing, etc.

What you can do is use the system to help you detect where you have problems, and to dissect exactly where each problem originates.

A number of tools are available to you; the main one, which I recommend all Ax sysadmins get familiar with, is the SQL tab under each user's User Options.

This provides an invaluable help in order to get information about what parts of your system are not performing as expected.

What I suggest all sysadmins do, as a minimum, is activate SQL tracing, set a threshold of perhaps 2000 (2 seconds; the value is in milliseconds) and store long queries and deadlocks in the table (database).

This stores in the database (accessible under Administration/Inquiries/Database/SQL Trace log) the details of every query that took more than 2 seconds to execute, including the complete call stack of the process at the time. You can even jump directly to the offending code and view or edit it.

With a little reflection it is always possible to optimise such queries and to thus reduce your performance issues, it is just a question of thinking about it clearly.
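Once the log fills up, a useful next step is grouping the slow statements by where they were called from, so you know which code to reflect on first. A minimal Python sketch, assuming you have exported the trace rows with a duration in milliseconds and a call stack whose first entry is the innermost frame (the export, the field names and the frame strings in the example are all assumptions):

```python
from collections import defaultdict
from dataclasses import dataclass, field

@dataclass
class TraceEntry:                 # hypothetical shape of one exported trace row
    statement: str
    duration_ms: int
    call_stack: list[str] = field(default_factory=list)   # innermost frame first (assumption)

def hotspots(entries, threshold_ms=2000):
    """Group statements slower than the threshold by innermost call-stack frame,
    returning (frame, count, total_ms) sorted by total time, worst first."""
    totals = defaultdict(lambda: [0, 0])
    for entry in entries:
        if entry.duration_ms > threshold_ms and entry.call_stack:
            frame = entry.call_stack[0]
            totals[frame][0] += 1
            totals[frame][1] += entry.duration_ms
    return sorted(((frame, count, total) for frame, (count, total) in totals.items()),
                  key=lambda row: row[2], reverse=True)

entries = [
    TraceEntry("SELECT ... CUSTTRANS", 2500, ["CustTrans.find", "SalesFormLetter.run"]),
    TraceEntry("SELECT ... CUSTTRANS", 4000, ["CustTrans.find"]),
    TraceEntry("SELECT ... INVENTSUM", 1500, ["InventOnhand.new"]),
    TraceEntry("UPDATE ... INVENTTRANS", 3000, ["InventUpd.update"]),
]
```

The statement under 2 seconds is ignored, matching the 2000 ms threshold suggested above.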

In V4 a few impediments have been placed in the way of getting this level of information: you have to enable client tracing on the AOS instance, otherwise the table will be empty, as the process will not be called.

Also, in V3 KR1+ there is an extended toolkit that you can install after installing the KR (which you should have access to through CustomerSource or PartnerSource). It allows you to control in detail what is being tracked and to log blocking behaviour: any process waiting more than 3 seconds on another automatically logs the fact that it is being blocked, so you can see the effect the "Piccadilly peak-hour jam" has had on your system.

The good thing about logs is that you can be factual about the effect of the changes and fixes you apply to your system.

Unfortunately MS have not seen fit to carry this tracing extension over into V4, probably because it was a separate development path. I think I have now managed to migrate most of it onto a V4 SP1-based system; the only issue I have is with the blocking monitor, where I need to work a little on the SQL side to find out exactly what is needed for it to operate.

Once it is complete I will post it here for you.

Take Care

Sven

Monday, 16 July 2007

New Head of MBS Good or Bad ?

Now we have Kirill Tatarinov as the new head of MBS.

He replaces Satya Nadella, who left in March 2007.

Tami Reller was interim manager, and is also leaving the MBS sphere to take on new roles elsewhere in MS.

Doug Burgum who was the manager for a long time left as recently as last year.

All in all, rather turbulent leadership for a division that now accounts for more than 1 billion of Bill's yearly revenue.

Personally I have nothing against any of the above people; my only issue is that MS does not seem to have really understood that they need to lead this thing, and changing managers every 3-6 months is not a positive message to send.

I had hoped that Satya taking over was a sign of them wanting to go for the Dev environment type of tool, seeing as his background was solidly anchored in the Development tools team at MS.

However he / it was not given a chance to mature, and he was moved probably because the business did not show sufficient momentum or because he did not feel it was important enough for him.

I do not see Kirill as a strategic choice; I could of course be very wrong. What do you think?

I see him more as yet another stop gap solution for the role.

Why was Tami not given longer to try to perform ?

So is this the beginning of the end? Are MS looking to sell off the division?

Some were already speculating when Satya was moved; have a look here at Joseph's blog (comments).

There are over 3000 people in the MBS division, however, and some are well integrated into MS; cross-pollination of job roles is something MS excels at (the average time in a job is around 18 months :-) ). I think it very unlikely that they would sell.

Take Care

Sven

Sunday, 15 July 2007

Dynamics Ax 4 SP2

Today the AIF is purely an Insert or Send style interfacing tool.

Of course this is not my only gripe, nor the only one others have; for more, go to Dave's blog on the subject, where he is asking anyone with live implementations to tell him what they have live.

Unfortunately I have so far only got a proof of concept up and running, so I do not have any live flows yet. However, once we can trust and ruggedize the application, we will probably have around 20 companies doing SO-PO type flows with automated corrections at several levels.

The proof of concept shows it is possible but with a lot of code changes to ensure that the AIF is operational at all times.

We had issues with the COM connector (.NET), issues with the non-existent update methods, etc.

Anyhow, more on this later, but please follow up on Dave's blog and make him feel less alone at the forefront of this issue.

Take Care

Sven

Tuesday, 10 July 2007

Refreshing screen info in V4

In V3, if you wanted to update a screen and refresh it in a uniform manner, you did the following:

    element.lock();
    // do all your manipulation of screen elements
    // call redraw, or refresh if you need to re-read the data
    element.unlock();

The functions are still there, but they currently seem to do nothing. I suspect this is related to MS teams taking over some of the screen painting functionality, probably using some other MSFT code package to update screen information. But this leaves me pretty much in limbo, as I have to get the user to click the scroll bar to update the screen elements, since redraw seems to have been deactivated as well.

Has anyone else noticed the above and, more to the point, has anyone found a workaround for this annoying little bug?

I will share it here if I find one. My Google help engine has not worked :-), and I have not seen it commented on publicly anywhere yet. On the MS help, the only discussion thread I could find was from 2004 and a bit off topic.

Take Care

Sven